Last edited by fuming2023 on 2023-11-18 17:30

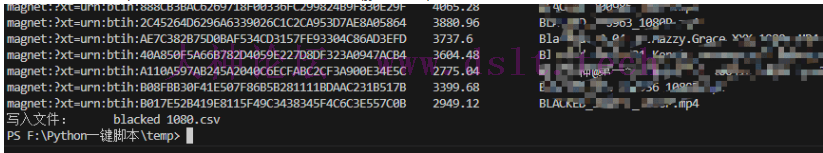

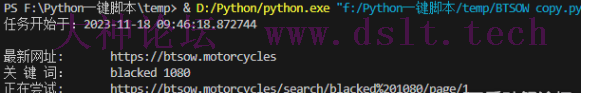

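BTSOW is a handy search site for torrents and magnet links. This script batch-scrapes the magnet links for a keyword of your choice (a must-have for seasoned users). I'm a beginner, so experts please don't laugh!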
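Each magnet link the script produces is the standard `magnet:?xt=urn:btih:` prefix followed by a 40-character hex info hash, which the script takes from the last 40 characters of each result's URL. Below is a minimal sketch of that step with a validity check added. Like the script's `href[-40:]` slice, it assumes the hash is at the very end of the URL. `magnet_from_href` is a hypothetical helper name, and the sample URL is made up:

```python
import re

# A BitTorrent v1 info hash written as 40 hexadecimal characters
INFO_HASH = re.compile(r"[0-9A-Fa-f]{40}")


def magnet_from_href(href):
    """Build a magnet link from a result URL that ends with the info hash.

    Assumption: the last 40 characters of href are the hash, which is
    what the script's href[-40:] slice relies on.
    """
    info_hash = href[-40:]
    if not INFO_HASH.fullmatch(info_hash):
        raise ValueError("URL does not end with a 40-character hex info hash: " + href)
    return "magnet:?xt=urn:btih:" + info_hash


# Hypothetical result URL, for illustration only
print(magnet_from_href("//btsow.example/magnet/detail/hash/" + "0123456789abcdef" * 2 + "01234567"))
# magnet:?xt=urn:btih:0123456789abcdef0123456789abcdef01234567
```

Raising an error instead of silently building a malformed link means a change in the site's URL layout shows up immediately rather than as a CSV full of broken links.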

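Features:

1. Fetches the current site address (the site is frequently unreachable at old addresses)

2. Scrapes up to 50 pages of results (a limit imposed by the site)

3. Saves the results to a CSV file (open it in Excel to filter by file size and drop small files)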
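Filtering by size (item 3) only works if every row uses the same unit. For that reason, the script converts BTSOW's sizes (shown as, e.g., `1.5GB`) to MB using 1 GB = 1024 MB. The script does the conversion with fixed string slices. The sketch below uses a regular expression instead, which also tolerates a space before the unit. It assumes the `Size:<number><unit>` text that those slices imply, and `size_to_mb` is a hypothetical name:

```python
import re

# Multipliers from the units BTSOW displays to MB (binary: 1 GB = 1024 MB)
UNIT_TO_MB = {"KB": 1 / 1024, "MB": 1, "GB": 1024, "TB": 1024 ** 2}
# A number followed by an optional space and a KB/MB/GB/TB unit
SIZE_RE = re.compile(r"([\d.]+)\s*([KMGT]B)", re.IGNORECASE)


def size_to_mb(text):
    """Convert a size string such as 'Size:1.5GB' to MB; return 0 if it cannot be parsed."""
    m = SIZE_RE.search(text)
    if not m:
        return 0
    number, unit = m.groups()
    try:
        return float(number) * UNIT_TO_MB[unit.upper()]
    except ValueError:  # e.g. a malformed number such as "1.2.3"
        return 0


print(size_to_mb("Size:1.5GB / Convert Date:2023-11-18"))  # 1536.0
```

Returning 0 for unparseable sizes matches the script's own fallback, so such rows simply sort to the bottom when filtered by size.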

```python
from bs4 import BeautifulSoup
import requests
import re
import csv
import random
import datetime
from time import sleep
from urllib.parse import quote

# Multipliers from the size units shown on BTSOW to MB
UNIT_TO_MB = {"TB": 1024 ** 2, "GB": 1024, "MB": 1, "KB": 1 / 1024}

Headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36 Edg/110.0.1587.50'
}


def parse_page(soup, mag, title, sizenum, date):
    """Append the magnet link, title, size (MB) and date of every result on one page."""
    # Result links point at //btsow... and carry the torrent name in their title attribute
    for item in soup.find_all("a", href=re.compile(r"//btsow"), title=True):
        size = item.find("div", class_=re.compile("size"))
        if size is None:
            continue  # skip entries without a size block so the four lists stay aligned
        # Expected text: "Size:1.23GB / Convert Date:2023-11-18"
        info = size.get_text().split(" / ")
        sizen, sizedw = info[0][5:-2], info[0][-2:]  # drop "Size:", split number and unit
        try:
            mb = float(sizen) * UNIT_TO_MB.get(sizedw, 0)  # unknown unit -> 0
        except ValueError:
            mb = 0
        mag.append('magnet:?xt=urn:btih:' + item.get('href')[-40:])  # last 40 chars = info hash
        title.append(item.get('title'))
        sizenum.append(mb)
        date.append(info[1][13:] if len(info) > 1 else '')  # drop "Convert Date:"


x = datetime.datetime.now()
print('Task started at: ' + str(x) + '\n')

s = requests.Session()

# tellme.pw/bts lists the current BTSOW address in its first <h2> link
tellme_url = 'https://tellme.pw/bts'
response = s.get(url=tellme_url, headers=Headers, timeout=30)
soup = BeautifulSoup(response.text, 'lxml')
new_url = soup.find('h2').a['href'].rstrip('/')
print('Latest URL:\t' + new_url)

gjc = input("Keyword:\t")
search_url = new_url + '/search/' + quote(gjc)

mag, title, sizenum, date = [], [], [], []
maxpage = 0

# The site serves at most 50 result pages
for p in range(1, 51):
    page_url = search_url + '/page/' + str(p)
    soup = BeautifulSoup(s.get(page_url, headers=Headers, timeout=30).text, 'lxml')
    # Beyond the last page the pagination bar is missing; page 1 is always parsed
    if p > 1 and soup.find("ul", {"class": 'pagination pagination-lg'}) is None:
        break
    print('Fetching:\t' + page_url)
    parse_page(soup, mag, title, sizenum, date)
    maxpage = p
    sleep(random.randint(1, 10))  # random 1-10 s pause between requests

if len(mag) == 0:
    print('No results found')
else:
    print('Pages fetched:\t', maxpage)
    print('Links found:\t', len(mag))
    print('Details:')
    for i in range(len(mag)):
        print(mag[i] + '\t' + str(sizenum[i]) + '\t\t' + title[i])

    # Replace characters that Windows does not allow in file names
    filename = re.sub(r'[\\/:*?"<>|]', '_', gjc) + '.csv'
    # utf-8-sig adds a BOM so Excel detects the encoding (e.g. of Chinese titles)
    with open(filename, mode='w', newline='', encoding='utf-8-sig') as file:
        writer = csv.writer(file)
        writer.writerow(['Torrent name', 'Magnet link', 'File size (MB)', 'Date'])
        writer.writerows(zip(title, mag, sizenum, date))
    print('Wrote file:\t' + filename)
```
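Item 3 suggests filtering the CSV in Excel. The same filtering can also be scripted. Below is a minimal sketch, assuming the four-column layout the script writes (name, magnet link, size in MB, date) with a single header row. `filter_by_size` is a hypothetical helper:

```python
import csv


def filter_by_size(path, min_mb):
    """Return the rows of a results CSV whose size column is at least min_mb, largest first.

    Assumes the column order name, magnet link, size (MB), date,
    with one header row, as written by the script.
    """
    # utf-8-sig also strips the BOM the script writes for Excel
    with open(path, newline="", encoding="utf-8-sig") as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header row
        kept = [row for row in reader if float(row[2]) >= min_mb]
    # Largest files first
    kept.sort(key=lambda row: float(row[2]), reverse=True)
    return kept
```

For example, `filter_by_size("keyword.csv", 500)` returns every result of at least 500 MB, largest first. Here, `keyword.csv` stands for whatever file the script wrote.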

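Note: if you repost this, please credit the source, 大神论坛 (Dashen Forum), with a link to this post and the author's information.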

Posted on 2023-11-18 17:30